Which type of security assessment provides the most accurate and dependable results?

When a security assessment is delivered, you should certainly expect that the majority of security vulnerabilities, and hopefully all critical and high-risk weaknesses, were identified at the time of testing. This is a fair ask.

When a threat actor goes poking around your systems, you can be sure they are doing the same: looking for an easy “way in” so they can breach and pivot within your systems with the aim of compromising them. So, what is the best approach when it comes to visibility and accuracy?

Assessment options fall into two recognized categories with a third I would like to present for consideration:

Two Recognized Categories

1. Automation – Software testing tools

2. Manual Assessment – Combination of tools, scripts, and human expertise.

A solution to consider:

Hybrid Solution – Automation + Human Intelligence + Analytics

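| | Option 1: Automation/Software Testing Tools | Option 2: Manual Assessment | Option 3: Hybrid Solution |
| --- | --- | --- | --- |
| Strengths | Scale/volume; on-demand, DevOps friendly (speed); continuous; cost effective | Logical issue detection; accurate, (should be) false positive free; complex exploit detection, human curiosity; contextual awareness | Complex issue detection; logical exploit discovery; false positive free, accurate; prioritized; scale/volume, on-demand; DevOps friendly; coverage, metrics, support |
| Weaknesses | Accuracy and risk rating; priority; coverage; depth (logical vulnerabilities); always requires expertise to validate output | Not scalable; expensive; not on-demand; does not fit with DevOps; point-in-time; no metrics | Not 100% automated? |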

Option 1: Automation/Software testing tools

Strengths:

- Scale/Volume,

- On-demand, DevOps friendly (speed),

- Continuous,

- Cost effective.

Weaknesses:

- Accuracy and Risk Rating,

- Priority,

- Coverage,

- Depth (Logical vulnerabilities),

- Will always require expertise to validate output.

Option 2: Manual assessment of a system

A combination of human intelligence, tools, scripts, and expertise.

Strengths:

- Logical issue detection,

- Accurate / (should be) False positive free,

- Complex exploit detection, human curiosity (never underrate this),

- Contextual awareness (aids priority of remediation).

Weaknesses:

- Not scalable,

- Expensive,

- Not on-demand,

- Does not fit with DevOps etc,

- Point-in-time scan,

- No Metrics?!

Option 3: Hybrid Solution – Augmented with Expertise

Strengths:

- Complex issue detection,

- Logical exploit discovery,

- False positive free / Accurate,

- Prioritized,

- Scale/Volume, On-demand,

- DevOps friendly,

- Coverage, Metrics, Support.

The Shoot Out

Automation and Testing Tools Vs. Manual Assessment

For the purposes of this article, let’s talk about options 1 & 2 above. If I talked about option 3 it might turn into a sales pitch, as that is what Edgescan delivers, and it’s worthy of a blog post of its own.

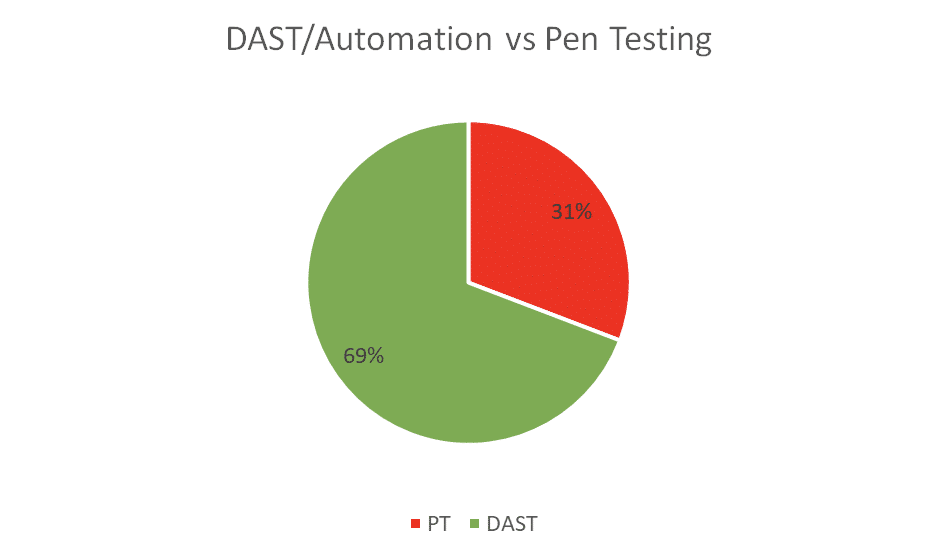

We launched our 2023 Vulnerability Statistics Report, which is compiled using data from thousands of vulnerability assessments and penetration tests over the past 12 months (to December 2022). One of the areas we focused on was evaluating results from an “automation vs. manual” standpoint: we mapped which high and critical severity vulnerabilities were more than likely detected using automation and which require human know-how.

The results are interesting in that automation tools (Scanners/DAST) detected 69% of the known critical and high severity vulnerabilities but missed the other 31%.

In my 20 years’ experience the best (and most fun) vulnerabilities are related to poor authentication, poor authorization, or broken business logic.

I’ve personally breached and compromised entire banks, ministries of finance and global enterprises via poor authorization and authentication controls, neither of which is easily detected via automation.

“Authorization is contextual; it is based on both the model and business process, which is unique per organization. Because of this, it is not a good candidate for automated assessment as tools don’t understand risk or context.”
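To make the point about context concrete, here is a minimal Python sketch. Everything in it (the `INVOICES` data, the `get_invoice` handler, the user names) is hypothetical and invented for illustration; none of it comes from the report. It models an endpoint that forgets to check ownership. A context-free check that only looks at the response sees nothing wrong, while a check written by someone who knows who should own what flags the problem.

```python
# Hypothetical in-memory data: which user owns which invoice.
INVOICES = {
    101: {"owner": "alice", "total": 250},
    102: {"owner": "bob", "total": 990},
}


def get_invoice(requesting_user, invoice_id):
    """Deliberately flawed handler: returns the invoice without checking ownership."""
    invoice = INVOICES.get(invoice_id)
    if invoice is None:
        return 404, None
    return 200, invoice  # Missing check: invoice["owner"] == requesting_user


def generic_check(user, invoice_id):
    """Context-free view: a 200 response with a body looks healthy."""
    status, _ = get_invoice(user, invoice_id)
    return status == 200


def contextual_check(user, invoice_id):
    """Context-aware view: a user must never receive another user's invoice."""
    status, invoice = get_invoice(user, invoice_id)
    return status == 200 and invoice["owner"] != user  # True means a finding


# alice requests bob's invoice.
print(generic_check("alice", 102))     # the response alone looks fine
print(contextual_check("alice", 102))  # flagged once ownership is known
```

The only difference between the two checks is the ownership rule, and that rule lives in the business model rather than in the HTTP response.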

We decided to do this as a “thought leadership” exercise to convince folks that relying on software-testing software alone to find vulnerabilities does not work. It’s like buying a shiny new electric drill: what we are really buying is the holes it makes, and to make nice holes we need a competent user. The drill alone does not do very much.

The same is true when we conduct a cyber assessment: what we should really care about is the output it delivers, and whether the approach taken to reach that result provides reasonable assurance.

High and Critical Severity Vulnerabilities typically not found by automation:

| Account Hijack vulnerability | Malicious File Upload |

| Bypass Client-Side Controls | Metadata found in files |

| Bypass Security Question | Metadata in PDF |

| Concurrent Logins Permitted | Multi Factor Authentication Not Enforced |

| Error Handling (complex variants) | Password Policy Not Enforced Server-Side |

| Excessive Permissions / Authorization weakness | Password Reset Token Not Invalidated |

| Hard-coded Credentials (various) | Security Question Not Enforced |

| Information Disclosure (Contextual) | Session Fixation |

| Insecure Binary Code Functions | Session Hijacking |

| Insecure password change functionality | Unauthenticated Access to Sensitive Resource |

| Insufficient Authorization | Unrestricted File Upload |

| Lack of anti-automation | User Enumeration |

| Lack of Multifactor Authentication | Weak Password Policy |
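Several of the findings above depend on a sequence of steps, and on knowing what should happen next, rather than on a single request and response. As a rough illustration, the Python sketch below models “Password Reset Token Not Invalidated”. The `ResetService` class and the `token_is_reusable` helper are hypothetical, written only for this example; they are not a real library, nor a description of how any particular tool works.

```python
import secrets


class ResetService:
    """Toy password-reset flow with a deliberate flaw: tokens are never invalidated."""

    def __init__(self):
        self.passwords = {"alice": "old-password"}
        self.tokens = {}

    def request_reset(self, user):
        token = secrets.token_hex(16)
        self.tokens[token] = user
        return token

    def reset_password(self, token, new_password):
        user = self.tokens.get(token)
        if user is None:
            return False
        self.passwords[user] = new_password
        # Flaw: the token should be discarded here, e.g. del self.tokens[token]
        return True


def token_is_reusable(service, user):
    """Multi-step check: use a reset token once, then try the same token again."""
    token = service.request_reset(user)
    first = service.reset_password(token, "first-new-password")
    second = service.reset_password(token, "second-new-password")
    return first and second  # True means the token survived its first use


print(token_is_reusable(ResetService(), "alice"))
```

Each individual call succeeds and looks normal; the weakness only shows up to someone who expects the second use of the token to fail.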

Conclusion:

From the data (pictured in the chart and table above) we can see that over 30% of high and critical severity vulnerabilities are not detected using automation alone. Sure, we can run automated cyber security assessments quickly, and they cost relatively little (apart from the expertise needed to validate and prioritize the discovered issues), but we’re looking at attack surface blind spots amounting to circa 30% of the high and critical severity vulnerabilities to be discovered.

Automation alone, on average, discovers only 69% of known critical and high severity vulnerabilities.

We have a 31% coverage blind spot with automation alone.