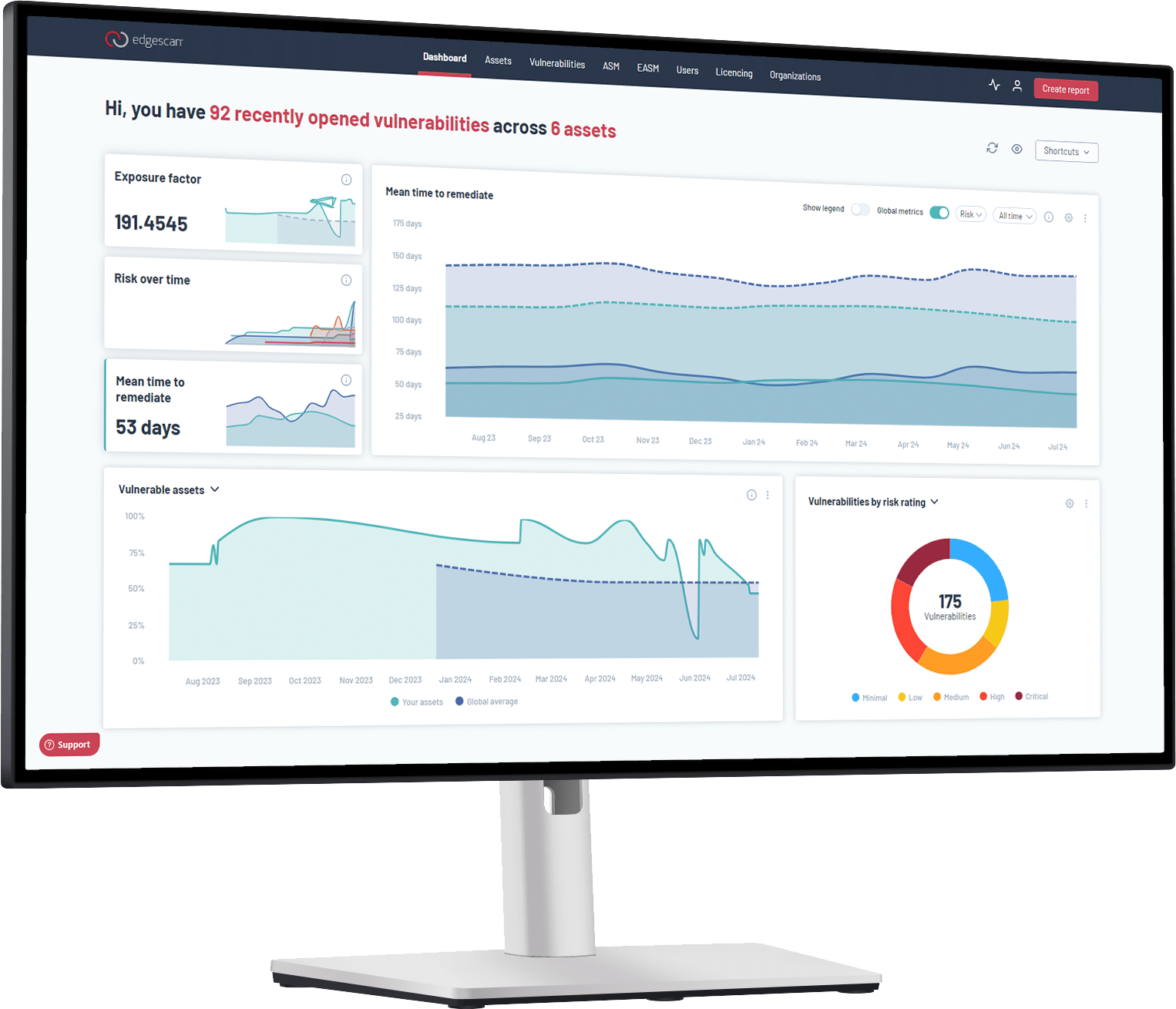

In recent discussions about CTEM (Continuous Threat and Exposure Management) and ASPM (Application Security Posture Management) with several noted industry analysts, the majority said their clients face the same challenges year after year, and that those challenges remain unsolved.

As the cyber industry pushes ahead, issues like accuracy appear to be ignored by the market because they seem too complex to solve. Edgescan has worked on this issue over the past five years by building a data lake of triaged vulnerabilities and using a combination of clever technology and data science to automate the validation of vulnerabilities. Our approach has resulted in near-false-positive-free vulnerability intelligence, with only approximately 8% of discovered vulnerabilities requiring manual inspection by our team.

Reasons for false positives

Despite advancements, vulnerability scanners can still produce false positives (flagging non-issues as threats). Automated scanning accuracy remains a significant challenge in cybersecurity. Here are a few reasons why:

Complex Environments: Modern IT environments are highly complex, with a mix of on-premises, cloud, and hybrid systems. Ensuring accurate scans across these diverse environments is challenging, and most point-and-click solutions do not provide the coverage required.

Configuration Issues: Incorrectly configured scanners can miss vulnerabilities (false negatives) or generate inaccurate results (false positives). Regularly fine-tuning and updating scanning configurations is essential, but it takes effort and is commonly overlooked.

Ecosystem Integration: How well vulnerability scanners integrate with other security tools and processes affects accuracy. Poor integration can lead to gaps in coverage and missed vulnerabilities. “Wiring” separate tools together is never as simple as it may appear.

The impact of inaccuracy should not be underestimated. Addressing it significantly improves efficiency and reduces friction in any vulnerability management or CTEM process.

False positives impact organisations in a number of ways, detailed below. It’s worth considering how you are addressing this elephant in the room…

Resource Drain: Security teams spend valuable time and effort investigating and remediating non-existent vulnerabilities. This not only wastes resources but also diverts attention from improving security posture. The drain also eats into development teams’ capacity, undermining trust and the value cybersecurity teams deliver to development.

Alert Fatigue: Constantly dealing with false positives can lead to “alert fatigue,” where security professionals (and possibly developers) become desensitized to alerts. Think of “the boy who cried wolf.” This increases the risk of genuine threats being overlooked amid a continuous deluge of noise. Vulnerability validation suppresses that noise.

Operational Disruption: False positives can disrupt daily operations, forcing developers and security teams to halt their regular tasks to address these misleading alerts. Development is all about developing new products. Being faced with false positives wastes time, distracts from real issues, undermines trust, generates unneeded noise, and is generally a negative disruptor.

Reduced Efficiency: The need to manually review each false positive can slow down automated processes and reduce overall efficiency. The requirement to review every output from a continuous exposure/posture management pipeline goes against the flow of such a system and introduces friction.

Impact on Morale: Continuous and persistent false positives can lead to frustration and decreased motivation among security and product development team members, affecting team advancement and overall productivity.

Prioritization: It is pointless to apply priority metadata to vulnerability intelligence if it contains errors and false positives. Doing so simply amplifies the problem, raising the alarm needlessly for non-issues.
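To make the prioritization point concrete, here is a minimal Python sketch. It is an illustration only, not a description of how Edgescan or any particular scanner works: the `Finding` class, its `severity` and `validated` fields, and the sample findings are all hypothetical. It shows how an unconfirmed finding with a high score can land at the top of a ranked list unless validation happens first.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """Hypothetical scanner finding; field names are illustrative only."""
    name: str
    severity: float   # assumed CVSS-style score from 0.0 to 10.0
    validated: bool   # True once the finding is confirmed as a real issue


def prioritize(findings, validated_only=True):
    """Return findings ordered from highest to lowest severity.

    With validated_only=True, unconfirmed findings are dropped before
    ranking, so priority is only assigned to confirmed issues.
    """
    pool = [f for f in findings if f.validated] if validated_only else list(findings)
    return sorted(pool, key=lambda f: f.severity, reverse=True)


# Made-up sample data for the sketch.
findings = [
    Finding("SQL injection (unconfirmed)", 9.8, validated=False),
    Finding("Outdated TLS configuration", 5.3, validated=True),
    Finding("Stored XSS", 7.1, validated=True),
]

naive = prioritize(findings, validated_only=False)  # ranks everything
clean = prioritize(findings)                        # ranks confirmed issues only

print([f.name for f in naive])
print([f.name for f in clean])
```

In the naive ranking, the unconfirmed finding sits in first place purely because of its score. Ranking only validated findings keeps the top of the list for issues that are known to be real.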